Convolutional Neural Networks (CNN)

In deep learning, a convolutional neural network (CNN, or ConvNet) is a class of artificial neural network most commonly applied to analyzing visual imagery. CNNs have applications in image and video recognition, recommender systems, image classification, image segmentation, medical image analysis, natural language processing, brain-computer interfaces, and financial time series.

Transfer Learning

Transfer learning is a research problem in machine learning that focuses on storing knowledge gained while solving one problem and applying it to a different but related problem.

For example, someone who can already ride a bicycle will likely learn to ride a scooter more easily and quickly than someone who cannot. Because the two activities are similar, the person reuses the balancing skill learned on the bicycle and transfers that learning without realizing it.

In other words, transfer learning stores the information obtained while solving one problem and reuses it when facing another. By drawing on this previous knowledge, models can reach higher accuracy and learn faster with less training data.

The best part about transfer learning is that we can reuse part of an already trained model instead of training the whole model from scratch, which saves time.

Dense Convolutional Network (DenseNet)

A Dense Convolutional Network (DenseNet) connects each layer to every other layer in a feed-forward fashion. DenseNets alleviate the vanishing-gradient problem, strengthen feature propagation, encourage feature reuse, and substantially reduce the number of parameters.

DenseNet works on the idea that convolutional networks can be substantially deeper, more accurate, and efficient to train if they have shorter connections between layers close to the input and those close to the output. The figure below is from the original paper which gives a nice visualization of scaling.

Note: DenseNet comes in several variants. I used DenseNet-201 because it is a relatively compact model (about 20 million parameters). If you want, you can try out other variants of DenseNet.
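To make the dense connectivity concrete, here is a small sketch in plain Python that tracks how the number of feature channels grows through DenseNet-201. It assumes the configuration reported in the DenseNet paper for this variant: 64 initial channels, a growth rate of 32, dense blocks of 6, 12, 48, and 32 layers, and transition layers that halve the channel count. The function name is made up for illustration.

```python
def densenet_output_channels(block_sizes, growth_rate=32, init_channels=64, compression=0.5):
    """Track the channel count through a DenseNet-style stack of dense blocks.

    Each layer in a dense block receives the concatenation of all earlier
    feature maps and contributes `growth_rate` new channels. A transition
    layer between blocks shrinks the channel count by `compression`.
    """
    channels = init_channels
    for i, num_layers in enumerate(block_sizes):
        # Concatenation: every layer adds growth_rate channels to the running total.
        channels += num_layers * growth_rate
        # There is no transition layer after the final dense block.
        if i < len(block_sizes) - 1:
            channels = int(channels * compression)
    return channels


# Assumed DenseNet-201 configuration (taken from the DenseNet paper).
print(densenet_output_channels([6, 12, 48, 32]))  # 1920
```

Under these assumptions the network ends with 1,920 feature channels, which is the size of the feature vector a classifier head would receive after global pooling.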

Let’s get started!

Model Training Using Transfer Learning

In this tutorial, you will learn how to classify images of aliens and predators by using transfer learning from a pre-trained network.

A pre-trained model has been previously trained on a dataset and contains the weights and biases that represent the features of whichever dataset it was trained on. You can either use the pre-trained model as is or use transfer learning to customize it for a given task. We will take the second approach.

Let’s start with the table of contents.

Data: downloading the dataset

Initialize: importing libraries, specifying file paths, defining constants

Preparing the Images: preparing the dataset for the model with various methods

Model: building the model and evaluating the results

Connecting to Drive

We need to connect to Drive and go to the directory where our code file is located.
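A minimal sketch of this step, assuming the notebook runs in Google Colab. The helper name and the project path are hypothetical; `drive.mount` is the standard Colab call for attaching Drive.

```python
import os


def mount_drive_and_cd(project_dir, mount_point="/content/drive"):
    """Mount Google Drive in Colab and change into the project directory."""
    # google.colab only exists inside a Colab runtime, so import it lazily.
    from google.colab import drive

    drive.mount(mount_point)
    os.chdir(project_dir)
    return os.getcwd()


# Hypothetical usage inside a Colab notebook (replace the placeholder path):
# mount_drive_and_cd("/content/drive/MyDrive/<your-project-folder>")
```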

Data

To download the dataset, we first download an API token (a .json file) from our Kaggle account and specify the path to that file.
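One way to set up the token, sketched with the standard library only. It assumes the Kaggle client's usual convention of reading `kaggle.json` from a `.kaggle` folder in the home directory; the helper name is hypothetical.

```python
import os
import shutil
from pathlib import Path


def install_kaggle_token(token_path, config_dir=None):
    """Copy a downloaded kaggle.json to the folder the Kaggle client reads."""
    config_dir = Path(config_dir) if config_dir else Path.home() / ".kaggle"
    config_dir.mkdir(parents=True, exist_ok=True)
    target = config_dir / "kaggle.json"
    shutil.copyfile(token_path, target)
    # Restrict permissions so that only the current user can read the token.
    os.chmod(target, 0o600)
    return target


# With the token in place, the Kaggle command-line client can fetch a dataset:
#   kaggle datasets download -d <owner>/<dataset-name>
# (the dataset identifier is a placeholder; use the one for your dataset)
```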

We downloaded the dataset.

We extracted the downloaded dataset from the .zip file.
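Extraction can be done with Python's built-in `zipfile` module. This is a sketch; the function name and the paths in the usage comment are placeholders.

```python
import zipfile
from pathlib import Path


def extract_dataset(zip_path, target_dir):
    """Extract a downloaded dataset archive and return its top-level entries."""
    target_dir = Path(target_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(target_dir)
    return sorted(p.name for p in target_dir.iterdir())


# Hypothetical usage:
# extract_dataset("<downloaded-archive>.zip", "dataset")
```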

Initialize

Let us first import the libraries.

Then let’s define the paths of train and validation data.

Next, we set the batch size and the number of epochs.

The DenseNet-201 architecture expects input images of size (224, 224) by default, so let us resize our images accordingly.

Preparing The Images

We have loaded the train and validation data. Since there are 2 classes in our dataset, we set label_mode to binary.
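A sketch of how such loading might look with Keras' `image_dataset_from_directory`, which infers class labels from subfolder names. The batch size here is an assumed value, since the text does not state it, and the helper name and directory names are placeholders.

```python
import tensorflow as tf

IMG_SIZE = (224, 224)  # input size stated in the text
BATCH_SIZE = 32        # assumed value; the text does not state it


def load_binary_dataset(directory, shuffle=True):
    """Load images from class-named subfolders as (image, label) batches."""
    return tf.keras.utils.image_dataset_from_directory(
        directory,
        label_mode="binary",  # two classes: a single 0/1 label per image
        image_size=IMG_SIZE,  # images are resized while loading
        batch_size=BATCH_SIZE,
        shuffle=shuffle,
    )


# Hypothetical usage:
# train_dataset = load_binary_dataset("<path-to-train-folder>")
# validation_dataset = load_binary_dataset("<path-to-validation-folder>")
```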

We printed the number of images in the 2 separate folders (alien, predator) inside the train folder.
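Counting the files per class folder needs only the standard library. This sketch assumes one subfolder per class; the function name and the list of image extensions are illustrative choices.

```python
from pathlib import Path

IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp", ".gif"}


def count_images_per_class(train_dir):
    """Return {class_name: number_of_image_files} for each subfolder."""
    counts = {}
    for class_dir in sorted(Path(train_dir).iterdir()):
        if class_dir.is_dir():
            counts[class_dir.name] = sum(
                1 for f in class_dir.iterdir() if f.suffix.lower() in IMAGE_EXTENSIONS
            )
    return counts


# Hypothetical usage:
# print(count_images_per_class("<path-to-train-folder>"))
```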

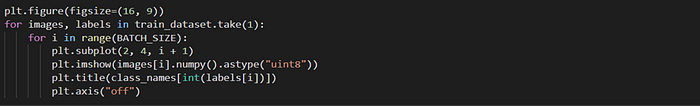

Visualize The Images

Image Augmentation

With data augmentation, we artificially increase the number and variety of images the model sees by applying random transformations to the existing images.
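A minimal sketch of augmentation using Keras preprocessing layers. The specific transformations (horizontal flip and a small rotation) are assumptions, since the text does not list the ones actually used.

```python
import tensorflow as tf

# The choice of transformations is an assumption; the text does not list them.
data_augmentation = tf.keras.Sequential(
    [
        tf.keras.layers.RandomFlip("horizontal"),
        tf.keras.layers.RandomRotation(0.1),  # up to +/- 10% of a full turn
    ],
    name="data_augmentation",
)

# Apply to a dummy batch; training=True enables the random transformations.
images = tf.random.uniform((4, 224, 224, 3), maxval=255.0)
augmented = data_augmentation(images, training=True)
```

Layers like these transform images on the fly during training rather than writing extra files to disk, so each epoch sees slightly different versions of the same pictures.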

Create Test Dataset

To create a test dataset, we moved 20% of our validation data to the test_dataset variable.
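One way to carve out such a test set with `tf.data`, sketched on dummy data. The split works on whole batches; the function name is hypothetical.

```python
import tensorflow as tf


def split_off_test_set(validation_dataset, test_fraction=0.2):
    """Move a fraction of the validation batches into a separate test set."""
    num_batches = int(validation_dataset.cardinality())
    num_test = int(num_batches * test_fraction)
    test_dataset = validation_dataset.take(num_test)
    remaining_validation = validation_dataset.skip(num_test)
    return remaining_validation, test_dataset


# Stand-in for the real validation set: 10 batches of dummy data.
dummy = tf.data.Dataset.range(40).batch(4)
val_ds, test_ds = split_off_test_set(dummy)
print(int(val_ds.cardinality()), int(test_ds.cardinality()))  # 8 2
```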

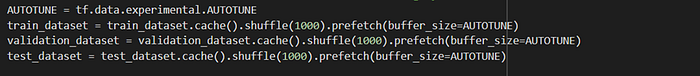

Improvements in Dataset

We cached the datasets to speed up loading and shuffled the images so that the model does not see them in a fixed order, which helps it generalize. We also let data reading overlap with training, so the input pipeline keeps up with the model and does not become a bottleneck.
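These pipeline tweaks map onto standard `tf.data` methods. The sketch below assumes `cache`, `shuffle`, and `prefetch` are the operations meant; the helper name and the buffer size are illustrative.

```python
import tensorflow as tf

AUTOTUNE = tf.data.AUTOTUNE


def optimize_pipeline(dataset, shuffle_buffer=None):
    """Cache, optionally shuffle, and prefetch a dataset."""
    dataset = dataset.cache()  # keep loaded elements in memory after the first pass
    if shuffle_buffer:
        dataset = dataset.shuffle(shuffle_buffer)  # randomize element order
    # Prepare upcoming batches while the model trains on the current one.
    return dataset.prefetch(buffer_size=AUTOTUNE)


# Hypothetical usage:
# train_dataset = optimize_pipeline(train_dataset, shuffle_buffer=1000)
# validation_dataset = optimize_pipeline(validation_dataset)
```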

Visualize Original And Augmented Images

Let’s visualize it to better understand what augmented images look like.

Model

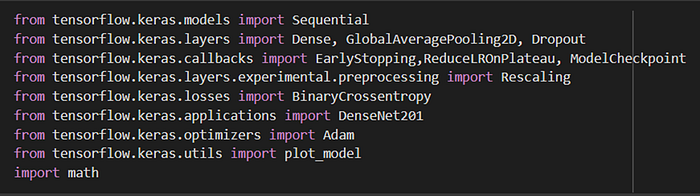

Necessary Imports

These are the libraries we need to import to create the model.

CNN Architecture

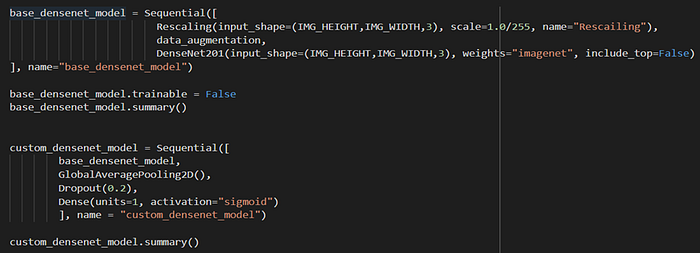

Keras provides DenseNet classes that make transfer learning easy. I used the DenseNet201 class with ImageNet weights. We rescaled our inputs to match what the DenseNet model expects for feature extraction, and we passed the augmented images into the base model.

By setting the base model's trainable property to False, we froze its weights so that they are not updated during training. Otherwise, what the model had already learned would be destroyed.

Since I used this model just for feature extraction, I did not include the fully-connected layer at the top of the network; instead, I specified the input shape and pooling. I also added my own pooling and dense layers.

Here is the code to use the pre-trained DenseNet-201 model.
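A minimal sketch of a model along these lines, assuming a global average pooling layer followed by a single sigmoid output unit (the exact head is not spelled out in the text). The `build_model` name and its `weights` parameter are introduced here for illustration, and the augmentation step is left out to keep the sketch short.

```python
import tensorflow as tf
from tensorflow.keras import layers


def build_model(weights="imagenet", input_shape=(224, 224, 3)):
    """Frozen DenseNet-201 feature extractor with a small binary classifier on top."""
    base_model = tf.keras.applications.DenseNet201(
        include_top=False,  # drop the ImageNet classification head
        weights=weights,
        input_shape=input_shape,
    )
    base_model.trainable = False  # freeze all pre-trained weights

    inputs = tf.keras.Input(shape=input_shape)
    # Scale pixels the way the DenseNet ImageNet weights expect.
    x = tf.keras.applications.densenet.preprocess_input(inputs)
    # training=False keeps the batch-normalization layers in inference mode.
    x = base_model(x, training=False)
    x = layers.GlobalAveragePooling2D()(x)  # one value per feature channel
    outputs = layers.Dense(1, activation="sigmoid")(x)  # assumed output layer
    return tf.keras.Model(inputs, outputs)


# Hypothetical usage (downloads the ImageNet weights on first call):
# model = build_model()
# model.summary()
```

Passing `weights=None` builds the same architecture with random weights, which is handy for checking shapes and parameter counts without a download.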

The base model has no trainable parameters because its trainable property is False. The full model, with the dense part added, has 1,921 trainable parameters.
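The figure of 1,921 is consistent with a single-unit dense layer sitting on a 1,920-value feature vector, which is what global pooling over DenseNet-201's final feature map would produce. That reading is an assumption about the head, checked here with a short calculation:

```python
def dense_layer_params(in_features, units, use_bias=True):
    """Number of trainable weights in a fully connected layer."""
    return in_features * units + (units if use_bias else 0)


# Assumption: 1,920 pooled features feeding one output unit.
print(dense_layer_params(1920, 1))  # 1921
```

That is 1,920 weights plus 1 bias.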

Fit The Model

Here are the snippets of training.

We can see that the learning rate was adjusted at the 24th epoch, and we get a final validation accuracy of 93.75%, which is pretty good. But wait, we need to look at the test accuracy too.
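A learning-rate adjustment during training is typically done with a callback such as Keras' `ReduceLROnPlateau`. The sketch below assumes that callback, an Adam optimizer, and binary cross-entropy loss; none of these settings are confirmed by the text, and the toy model and data exist only to show the call pattern.

```python
import tensorflow as tf


def train_and_evaluate(model, train_ds, val_ds, test_ds, epochs):
    """Compile, train with learning-rate reduction, then score on the test set."""
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),  # assumed optimizer
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )
    # Lower the learning rate when the validation loss stops improving.
    reduce_lr = tf.keras.callbacks.ReduceLROnPlateau(
        monitor="val_loss", factor=0.2, patience=3, min_lr=1e-6
    )
    history = model.fit(
        train_ds, validation_data=val_ds, epochs=epochs, callbacks=[reduce_lr]
    )
    test_loss, test_accuracy = model.evaluate(test_ds)
    return history, test_accuracy


# Tiny stand-in model and random data, only to show the call pattern.
features = tf.random.uniform((16, 8))
labels = tf.cast(tf.random.uniform((16, 1)) > 0.5, tf.float32)
toy_ds = tf.data.Dataset.from_tensor_slices((features, labels)).batch(4)
toy_model = tf.keras.Sequential(
    [tf.keras.Input(shape=(8,)), tf.keras.layers.Dense(1, activation="sigmoid")]
)
history, test_accuracy = train_and_evaluate(toy_model, toy_ds, toy_ds, toy_ds, epochs=2)
```

The returned `history.history` dictionary holds the per-epoch loss and accuracy values, which can be plotted against the number of epochs.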

Evaluate

Let’s visualize the loss and accuracy against number of epochs.

We got an accuracy of 89.99% on the test dataset.

Results

Transfer learning is a technique that enables existing algorithms to achieve higher performance in a shorter time with less data. Although its advantages are strong, there are situations where it should be applied with care. Transfer learning only works if the initial and target problems are similar enough for the first round of training to be relevant. When the source data and the target data are very different from each other, the problem of negative transfer occurs: if the first round of training is too far off the mark, the model may actually perform worse than if it had never been pre-trained at all. Right now, there are still no clear standards on what types of training are sufficiently related, or how this should be measured.

Deep learning is all about experimentation. You can improve the performance of your model by using a different DenseNet variant or by transfer learning with a completely different model. You can also change your model's behavior significantly by tuning its hyperparameters.

I hope this blog helped you understand how to perform transfer learning with a CNN. Please feel free to experiment more to get better performance. You can find the source code at this link.

Reference:

This Kaggle notebook gave me an idea of how to do transfer learning with DenseNet.

This post on Quora guided me as I explored the disadvantages of transfer learning.

CNN and Transfer Learning definitions from Wikipedia.

Thanks, everyone, for reading this. I hope it was worth your time. Please feel free to share your valuable feedback or suggestions.

Author

Zeynep Aslan